The creation of the bot-tester for match-3 games

In the process of working on match-3 project sooner or later the question arises — how to evaluate the complexity of the created levels and the overall balance of the game?

Even with a large number of testers in the team, to collect detailed statistics for each level (and in modern games there are hundreds of them) is simply unrealistic. It is obvious that the testing process need to automate.

Below is the story of how we did it in our indie матч3 game at which we finish the work. (Caution — rag!)

For automated testing, we decided to program a “bot” that would meet the following requirements:

linking to the game engine — the bot needs to “enjoy” the same code blocks, which are utilized during a standard game. In this case, you can be confident that a bot and a real player will play the same game (i.e. will deal with the same logic, mechanics, bugs and other things).

Scalability — you need to bot to test not only existing levels, but the levels that can be created in the future (given that in the future the game can be added new types of chips, cells, enemies, etc.). This requirement largely overlaps with the previous one.

Capacity — to collect statistics on one level you need to “play” in it at least 1000 times, and levels of a hundred or so bot needs to play and gather statistics quickly enough that the analysis of one level does not cover the entire day.

Authenticity — moves the bot should be more or less close to the average player.

The first three points speak for themselves, but how to make bot to play “properly”?

Before you begin to work on our project, we were about 1000 levels of varying difficulty in different матч3 games (two or three games even gone entirely). And considering this experience, we decided to highlight the following approach to creating levels.

Immediately say, that this decision does not purport to be the ultimate truth, and not without flaws, but it has helped us to build the bot meets the requirements described above.

We decided to assume that a good level of this kind of task for finding the optimal strategy — level designer creating the level “thinks” the optimal sequence of actions, and the player must guess. If he guesses correctly, then the level is held relatively easily. If the player has a “out of order”, then he likely falls into a hopeless situation.

For example, suppose that we are given a level in which there are “breeding” chips (if not to gather a group of chips next to them, before the next player's turn they take one of their neighboring cells). The aim of a level to pull certain pieces in the bottom row of the Board. Our game is dedicated to marine adventure (yeah, again!) so you want to pull on the bottom of the divers, and the role of multiplying chips are the corals:

If the player does not spend time destroying corals from the beginning, and instead will immediately try to pull the divers, it is likely that corals will grow and make the level impassable. Therefore, in this case we can say that the strategy that “wished for” level-designer, is approximately as follows: “as soon As possible to destroy the coral, and then pull divers.”

Thus, the strategy of passing level from the level designer, and on the part of the player is to prioritize between different tactical actions (I'll call them tactics). Set these tactics are roughly the same for all games of the genre:

the

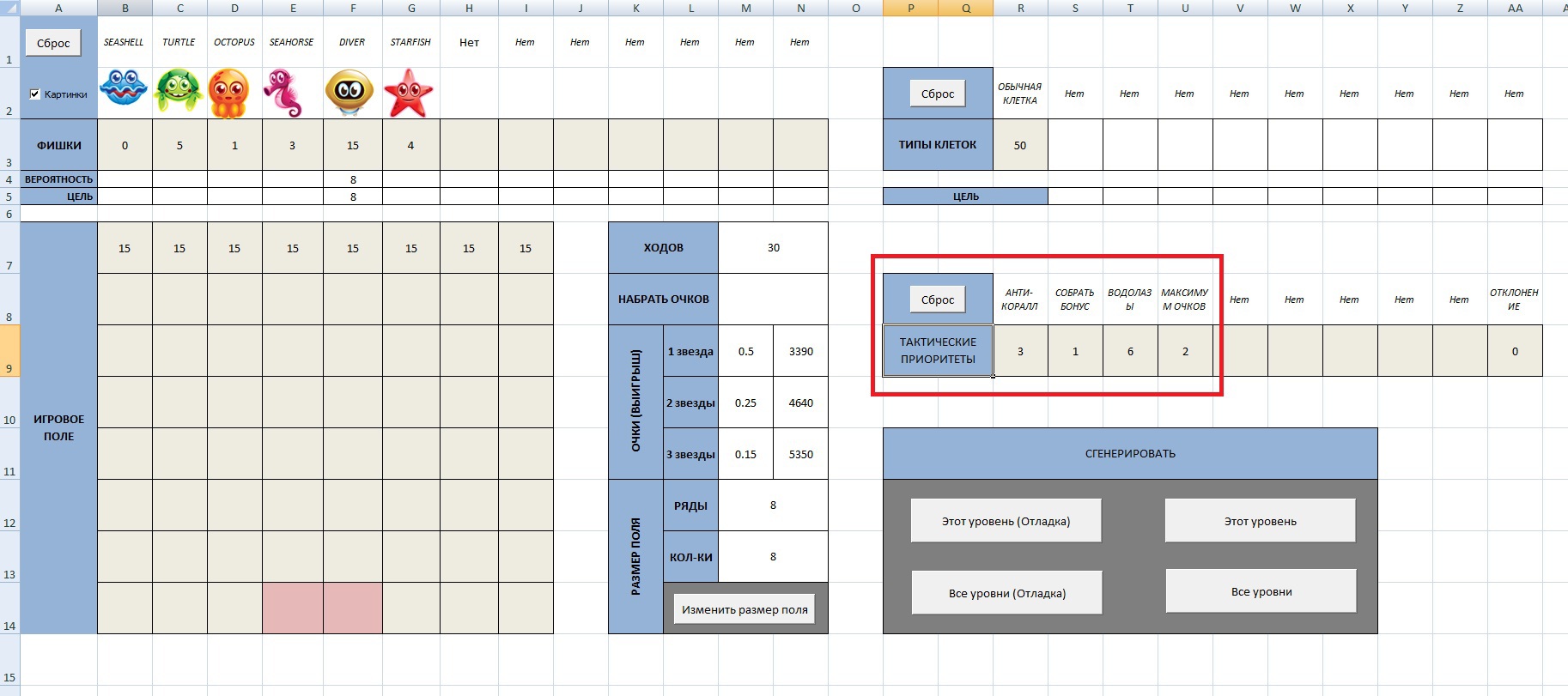

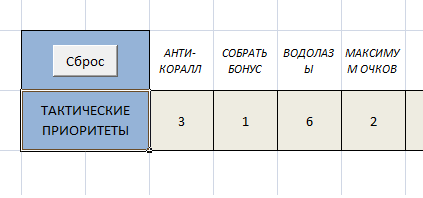

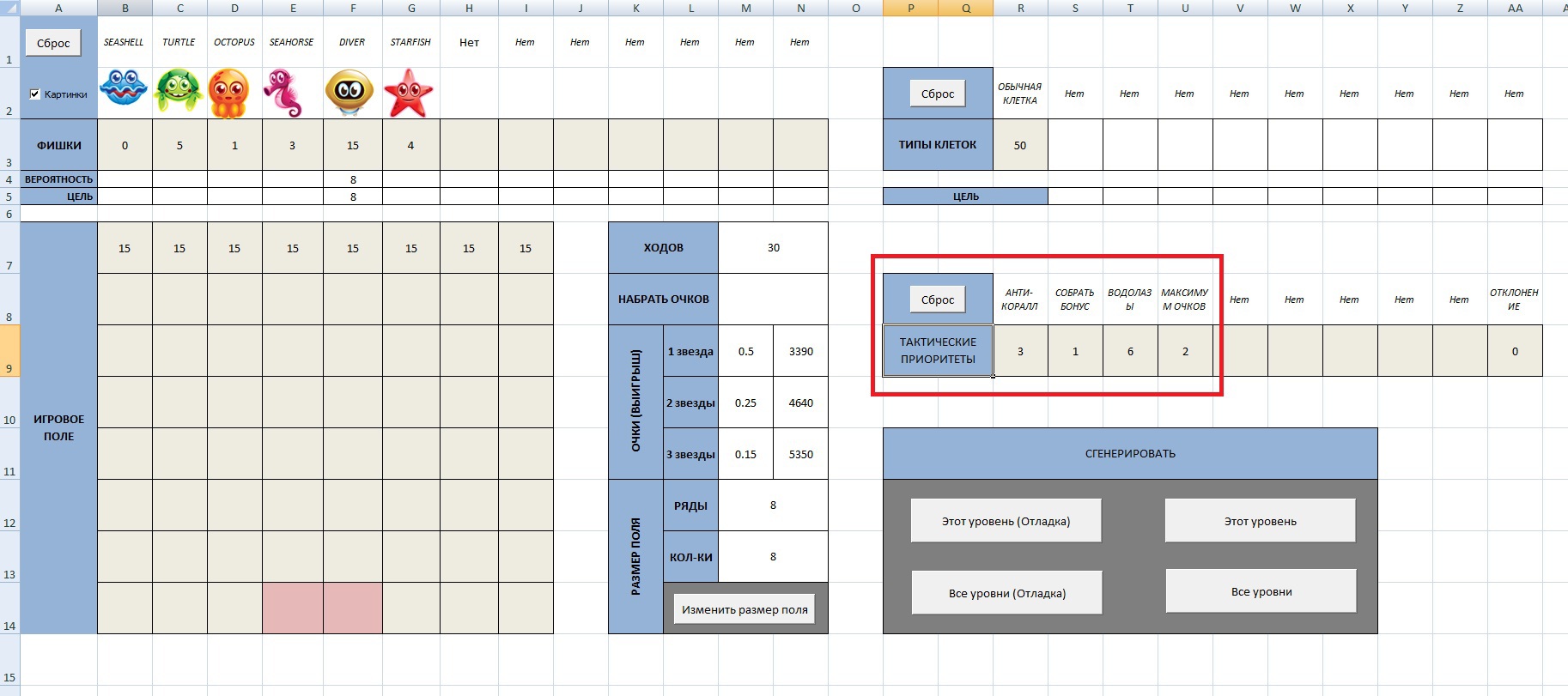

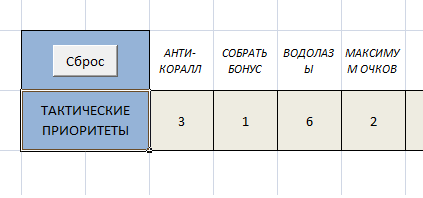

Placing these priorities for each level, we added to our level editor on the basis of Excel:

Level designer creates a level and sets tactical priorities for the bot — generated XML file will contain all the necessary information about the level and how to test it. As seen in the picture, to solve the level assumes the following priorities:

Bot when testing level must take account of these priorities and to choose the appropriate moves. Having defined the strategy, program bot is already difficult.

Bot works in the following simple algorithm:

The task of determining whether the tactical priority here in detail will not be considered. In short — when you build the array of possible moves stores the settings for each move (how many chips were collected, the type of the chips was “created” bonus-feature, how many points, etc.) and then these parameters are checked against the priorities.

After the bot “plays” level the specified number of times, it displays the statistics results: the number of times the level has been won, the average number of moves it took, how many points, etc. Based on this information it will be possible to decide whether the difficulty level desired balance. For example, a part of the printout by level is shown in the picture above:

It is seen that the level is lost in 63% of cases. A quantitative assessment of the level at which you rely upon when balancing and defining the levels in the game.

Above we assumed that the player selecting the next move analyzes the in this position the moves and one chooses the one that's best suited for most priority at the moment tactics. And based on this assumption, we asked the logic for the bot.

But there are just two assumptions:

the

It turns out, the bot based on these assumptions will not play as average, but as a “best” player, perfectly familiar with all the intricacies of the game. And focus on the statistics collected this bot is impossible. What do you do?

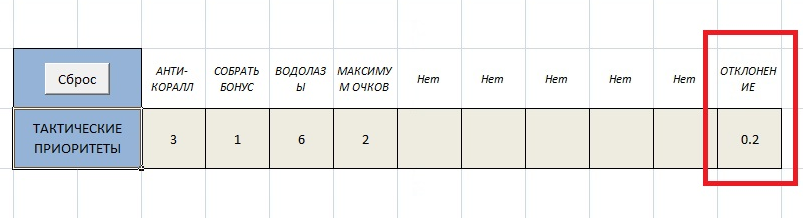

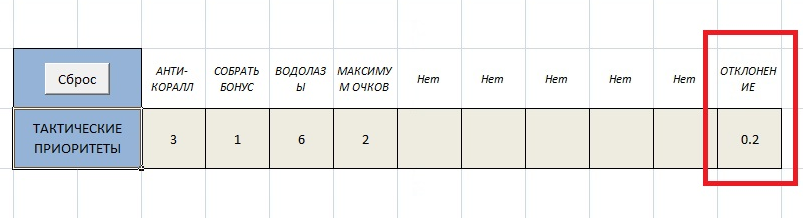

It is obvious that we need to introduce some correction factor, which would make the actions of the bot less “perfect”. We decided to stay on a simple option is to make part of the moves the bot just random.

The coefficient determines the number of random moves defines again a level-designer based on their goals. We called this factor “a deviation from the strategy” — ask him for that level equal to 0.2:

Deviation of 0.2 means that with a probability of 0.2 (or 20% of the time) the bot just randomly picks a move available on the Board. See how to change the statistics level if such a deviation (the previous statistics are calculated under the deviation equal to zero — i.e., the bot absolutely should have specified priorities):

The percentage of lost levels expected grew by 15% (from 63 to 78). The average completeness of a lost level (percentage lowered to the bottom divers) is also expected to fall. But the average number of moves when winning, curiously, has not changed.

Statistics show that this level refers to the relatively complex: 24 of 30 moves should be well thought out (30 * 0.2 = 6 moves can be made “in vain”), and even in this case, in 78% of cases the player loses.

The question remains — where did the factor of 0.2 for this level? What to take the coefficients for the other levels? We decided to leave it to the discretion of the level designer.

The meaning of this coefficient is very simple: “the number of ill-considered moves that can make a player at this level”. If you need a simple level for the initial stage of the game, which should be easily passed by any player, it is possible to balance the level of this coefficient equal to 0.9 or even 1. If you need difficult or very difficult level for the passage of which the player must exert maximum efforts and skills, balancing can be carried out with small or even zero deviation from the optimal strategy.

And finally, a few words about speed.

Bot we did part of the game engine, depending on the exhibited flag, the program either waits for the players, or the moves made by the bot.

The first version of the bot is quite slow for 1000 runs level of 30 moves took more than an hour, despite the fact that the test mode was turned off all graphical effects and the chips were moving between the cells instantly.

Since all game logic is tied to the rendering cycle, but it is limited 60ю frames per second, for acceleration of the tester, it was decided to disable vertical sync and “let go” of FPS. We are using LibGDX framework, in which it's done (maybe useful to someone):

After that, the bot smartly ran at speeds of nearly 1000 fps! For most levels, this is enough to make 1000 “runs” of less than 5 minutes. Honestly, it would be even faster, but this is a working set.

You can watch a video of the bot (sorry, when you record a video the FPS drops to about 120)

In the end, have the boat tied to the game engine — no need to maintain separate code tester.

If in the future the game will add new mechanics, then the bot will be easy to teach them test — will only need to add in a level editor IDs for new tactics and enter the code in additional parameters in the analysis of moves.

The hardest, of course, to evaluate how the behavior of the bot matches the behavior of the average player. But it will be a weakness of any approach to the construction of an automatic tester, so testing on living people (especially the target audience) no one, of course, not canceled.

Article based on information from habrahabr.ru

Even with a large number of testers in the team, to collect detailed statistics for each level (and in modern games there are hundreds of them) is simply unrealistic. It is obvious that the testing process need to automate.

Below is the story of how we did it in our indie матч3 game at which we finish the work. (Caution — rag!)

problem Statement

For automated testing, we decided to program a “bot” that would meet the following requirements:

linking to the game engine — the bot needs to “enjoy” the same code blocks, which are utilized during a standard game. In this case, you can be confident that a bot and a real player will play the same game (i.e. will deal with the same logic, mechanics, bugs and other things).

Scalability — you need to bot to test not only existing levels, but the levels that can be created in the future (given that in the future the game can be added new types of chips, cells, enemies, etc.). This requirement largely overlaps with the previous one.

Capacity — to collect statistics on one level you need to “play” in it at least 1000 times, and levels of a hundred or so bot needs to play and gather statistics quickly enough that the analysis of one level does not cover the entire day.

Authenticity — moves the bot should be more or less close to the average player.

The first three points speak for themselves, but how to make bot to play “properly”?

Strategy bot. First approach

Before you begin to work on our project, we were about 1000 levels of varying difficulty in different матч3 games (two or three games even gone entirely). And considering this experience, we decided to highlight the following approach to creating levels.

Immediately say, that this decision does not purport to be the ultimate truth, and not without flaws, but it has helped us to build the bot meets the requirements described above.

We decided to assume that a good level of this kind of task for finding the optimal strategy — level designer creating the level “thinks” the optimal sequence of actions, and the player must guess. If he guesses correctly, then the level is held relatively easily. If the player has a “out of order”, then he likely falls into a hopeless situation.

For example, suppose that we are given a level in which there are “breeding” chips (if not to gather a group of chips next to them, before the next player's turn they take one of their neighboring cells). The aim of a level to pull certain pieces in the bottom row of the Board. Our game is dedicated to marine adventure (yeah, again!) so you want to pull on the bottom of the divers, and the role of multiplying chips are the corals:

If the player does not spend time destroying corals from the beginning, and instead will immediately try to pull the divers, it is likely that corals will grow and make the level impassable. Therefore, in this case we can say that the strategy that “wished for” level-designer, is approximately as follows: “as soon As possible to destroy the coral, and then pull divers.”

Thus, the strategy of passing level from the level designer, and on the part of the player is to prioritize between different tactical actions (I'll call them tactics). Set these tactics are roughly the same for all games of the genre:

the

-

the

- to assemble as many chips (points) the

- to destroy “nuisance” chips\cells the

- to create bonus chips the

- to attack “boss” the

- to pull some chips down the

- and more...

Placing these priorities for each level, we added to our level editor on the basis of Excel:

Level designer creates a level and sets tactical priorities for the bot — generated XML file will contain all the necessary information about the level and how to test it. As seen in the picture, to solve the level assumes the following priorities:

-

the

- Destruction of corals (ANTI-CORAL) the

- Creation bonus (COLLECT BONUS) the

- the Descent of divers (DIVERS) the

- to select the most valuable moves (MAXIMUM POINTS)

Bot when testing level must take account of these priorities and to choose the appropriate moves. Having defined the strategy, program bot is already difficult.

Algorithm bot

Bot works in the following simple algorithm:

-

the

- to Load a list of priorities for level from XML file the

- Save all possible at the moment moves in an array the

- to Take the next priority from the list the

- to Check if the array moves corresponding to the current priority the

- If there is, then choose the best move the

- If no, GOTO 3. the

- After the move is made, GOTO 2

The task of determining whether the tactical priority here in detail will not be considered. In short — when you build the array of possible moves stores the settings for each move (how many chips were collected, the type of the chips was “created” bonus-feature, how many points, etc.) and then these parameters are checked against the priorities.

After the bot “plays” level the specified number of times, it displays the statistics results: the number of times the level has been won, the average number of moves it took, how many points, etc. Based on this information it will be possible to decide whether the difficulty level desired balance. For example, a part of the printout by level is shown in the picture above:

STATISTICS LEVEL:

====================================

LIMIT MOVES: 30

The NUMBER of iterations: 1000

The PERCENTAGE of losses of: 63 % (630 RUNS)

The AVERAGE COMPLETENESS of a LOST LEVEL: 61 %

The AVERAGE NUMBER of MOVES WHEN a WIN: 24

------------------------------------

POINTS:

-------------------

MINIMUM SCORE: 2380

MAXIMUM SCORE: 7480

-------------------

------------------------------------

STATISTICS RUNS:

-------------------

0. LOSS (87.0 % has ended)

1. LOSS (12.0 % has ended)

2. LOSS (87.0 % has ended)

3. LOSS (87.0 % has ended)

4. LOSS (87.0 % has ended)

5. WIN (LEVEL COMPLETED 26 MOVES. 2 STARS COLLECTED.)

6. LOSS (75.0 % has ended)

...

It is seen that the level is lost in 63% of cases. A quantitative assessment of the level at which you rely upon when balancing and defining the levels in the game.

And who said that the player will act in this way?

Above we assumed that the player selecting the next move analyzes the in this position the moves and one chooses the one that's best suited for most priority at the moment tactics. And based on this assumption, we asked the logic for the bot.

But there are just two assumptions:

the

-

the

- Real player is unlikely to analyses all the available the moves — rather, it selects the first found more or less reasonable speed. And even if he tries to consider all the moves on the Board, he may simply not notice a good move.

the - the Player isn't always able to identify and properly prioritize — to do this you need good knowledge of a particular game and its mechanic, so it cannot be expected of optimal actions for each course. (Although this is less of a problem because the player will learn from their mistakes and in the end will understand that it is important to pass the level, and what can wait).

It turns out, the bot based on these assumptions will not play as average, but as a “best” player, perfectly familiar with all the intricacies of the game. And focus on the statistics collected this bot is impossible. What do you do?

Strategy bot. Second approximation

It is obvious that we need to introduce some correction factor, which would make the actions of the bot less “perfect”. We decided to stay on a simple option is to make part of the moves the bot just random.

The coefficient determines the number of random moves defines again a level-designer based on their goals. We called this factor “a deviation from the strategy” — ask him for that level equal to 0.2:

Deviation of 0.2 means that with a probability of 0.2 (or 20% of the time) the bot just randomly picks a move available on the Board. See how to change the statistics level if such a deviation (the previous statistics are calculated under the deviation equal to zero — i.e., the bot absolutely should have specified priorities):

STATISTICS LEVEL:

====================================

LIMIT MOVES: 30

The NUMBER of iterations: 1000

The PERCENTAGE of losses of: 78 % (780 RUNS)

The AVERAGE COMPLETENESS of a LOST LEVEL: 56 %

The AVERAGE NUMBER of MOVES WHEN a WIN: 24

------------------------------------

POINTS:

-------------------

MINIMUM SCORE: 2130

MAXIMUM SCORE: 7390

-------------------

...

The percentage of lost levels expected grew by 15% (from 63 to 78). The average completeness of a lost level (percentage lowered to the bottom divers) is also expected to fall. But the average number of moves when winning, curiously, has not changed.

Statistics show that this level refers to the relatively complex: 24 of 30 moves should be well thought out (30 * 0.2 = 6 moves can be made “in vain”), and even in this case, in 78% of cases the player loses.

The question remains — where did the factor of 0.2 for this level? What to take the coefficients for the other levels? We decided to leave it to the discretion of the level designer.

The meaning of this coefficient is very simple: “the number of ill-considered moves that can make a player at this level”. If you need a simple level for the initial stage of the game, which should be easily passed by any player, it is possible to balance the level of this coefficient equal to 0.9 or even 1. If you need difficult or very difficult level for the passage of which the player must exert maximum efforts and skills, balancing can be carried out with small or even zero deviation from the optimal strategy.

Performance

And finally, a few words about speed.

Bot we did part of the game engine, depending on the exhibited flag, the program either waits for the players, or the moves made by the bot.

The first version of the bot is quite slow for 1000 runs level of 30 moves took more than an hour, despite the fact that the test mode was turned off all graphical effects and the chips were moving between the cells instantly.

Since all game logic is tied to the rendering cycle, but it is limited 60ю frames per second, for acceleration of the tester, it was decided to disable vertical sync and “let go” of FPS. We are using LibGDX framework, in which it's done (maybe useful to someone):

cfg.vSyncEnabled = false;

cfg.foregroundFPS = 0;

cfg.backgroundFPS = 0;

new LwjglApplication(new YourApp(), cfg,);

After that, the bot smartly ran at speeds of nearly 1000 fps! For most levels, this is enough to make 1000 “runs” of less than 5 minutes. Honestly, it would be even faster, but this is a working set.

You can watch a video of the bot (sorry, when you record a video the FPS drops to about 120)

Results

In the end, have the boat tied to the game engine — no need to maintain separate code tester.

If in the future the game will add new mechanics, then the bot will be easy to teach them test — will only need to add in a level editor IDs for new tactics and enter the code in additional parameters in the analysis of moves.

The hardest, of course, to evaluate how the behavior of the bot matches the behavior of the average player. But it will be a weakness of any approach to the construction of an automatic tester, so testing on living people (especially the target audience) no one, of course, not canceled.

Комментарии

Отправить комментарий