The magic of H.264

H.264 is a video compression standard, and it is everywhere. It is used to compress video on the web, on Blu-ray discs, in phones, surveillance cameras and drones; everywhere. Practically everything uses H.264 now.

H.264 deserves real credit. It is the result of 30 years of work toward a single goal: reducing the bandwidth needed to transmit high-quality video.

From a technical point of view, it is fascinating. This article gives a high-level description of how some of its compression mechanisms work; I'll try not to bore you with details. It is also worth noting that most of the techniques below apply to video compression in general, not just to H.264.

Why bother to compress anything?

Uncompressed video is a sequence of two-dimensional arrays holding the pixels of each frame, so it is a three-dimensional array of bytes (two spatial dimensions and one temporal). Each pixel is encoded with three bytes, one for each of the three primary colors (red, green, and blue).

1080p @ 60 Hz = 1920x1080x60x3 bytes per second => ~370 MB/s.

That would be almost impossible to work with. A 50 GB Blu-ray disc could hold only about 2 minutes of video. Moving it around would not be easy either: even an SSD would struggle to write data from memory to disk at that rate.
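The arithmetic above can be checked with a few lines of code. This is a minimal sketch assuming 3 bytes per pixel (8-bit R, G, B) and decimal units (1 MB = 10^6 bytes, 1 GB = 10^9 bytes); the function name is hypothetical.

```python
# Raw (uncompressed) video size, assuming 3 bytes per pixel (8-bit R, G, B)
BYTES_PER_PIXEL = 3

def raw_bytes_per_second(width: int, height: int, fps: int) -> int:
    """Bytes per second for uncompressed RGB video."""
    return width * height * fps * BYTES_PER_PIXEL

rate = raw_bytes_per_second(1920, 1080, 60)
print(f"{rate / 1e6:.0f} MB/s")  # 373 MB/s

# How long a 50 GB Blu-ray (decimal gigabytes) would last at that rate
BLU_RAY_BYTES = 50 * 10**9
seconds = BLU_RAY_BYTES / rate
print(f"{seconds / 60:.1f} minutes")  # 2.2 minutes
```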

So yes, compression is necessary.

Why H.264?

I'll definitely answer that question. But first, let me show you something. Take a look at Apple's home page:

I saved a screenshot of it and made two files:

- a PNG screenshot of the home page: 1015 KB
- a 5-second H.264 video of the same page at 60 fps: 175 KB

Um... what? The file sizes look swapped.

No, they're right. The H.264 video with 300 frames weighs 175 KB. A single frame of that video saved as a PNG weighs 1015 KB.

We are storing 300 times more data in the video, yet the resulting file is almost 6 times smaller. Per frame, that makes H.264 more than 1500 times more efficient than PNG.
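The per-frame comparison is simple arithmetic on the two file sizes listed above. A small sketch, using only those numbers (KB units cancel out, so their exact definition does not matter here):

```python
png_frame_kb = 1015   # one PNG screenshot
h264_video_kb = 175   # 5 s of H.264 at 60 fps
frames = 5 * 60       # 300 frames

# How much smaller the whole video is than a single PNG frame
size_ratio = png_frame_kb / h264_video_kb

# Storage per frame: PNG needs 1015 KB, the video needs 175 / 300 KB
per_frame_ratio = frames * png_frame_kb / h264_video_kb
print(f"{size_ratio:.1f}x smaller, {per_frame_ratio:.0f}x more efficient per frame")
```

The exact per-frame figure comes out somewhat above the rounded "1500 times", because the video is closer to 6 than to 5 times smaller.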

How is this possible? What's the trick?

There are lots of tricks! H.264 uses every technique you can probably imagine (and plenty you can't). Let's go over the main ones.


Getting rid of excess weight

Imagine you are preparing a car for a race and need to make it faster. What do you do first? You shed excess weight. Say the car weighs one ton. You start throwing out unnecessary parts... The back seat? Pfft... out it goes. The subwoofer? We can live without music. Air conditioning? Not needed. The transmission? Into the trash... wait, we still need that.

So you get rid of everything except what is necessary.

This approach of throwing away the unnecessary parts is called lossy compression. H.264 is lossy: it discards the less important information while keeping the important parts.

PNG is lossless. All the information is preserved, pixel for pixel, so the original image can be reconstructed exactly from the PNG file.

Important parts? How can the algorithm tell which parts of a frame are important?

There are a few obvious ways to trim an image. Maybe the top-right quarter of the picture is useless; then we could remove that corner and fit into ¾ of the original weight. Now the car weighs 750 kg. Or we could cut off a border of some width around the edges, since the important information is usually in the middle. Those would work, but H.264 does nothing of the sort.

So what does H.264 actually do?

Like all lossy compression algorithms, H.264 reduces detail. Below is a comparison of an image before and after discarding detail.

Notice how the holes in the MacBook Pro's speaker grille have disappeared in the compressed image? If you don't zoom in, you might not notice at all. The image on the right weighs only 7% of the original, even though no compression in the traditional sense has been applied. Imagine a car that weighs only 70 kg!

7%, wow! How do you get rid of detail like that?

First, a little mathematics.


Information entropy

Now we get to the fun part! If you took an information theory class, you may remember the concept of information entropy. Information entropy is the number of bits needed to represent some data. Note that this is not the size of the data itself; it is the minimum number of bits needed to represent all of the information the data contains.

For example, if your data is the result of a single coin toss, its entropy is 1 bit. With 2 coin tosses, you need 2 bits.
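The coin examples follow from Shannon's formula H = -Σ p·log2(p). A minimal sketch, assuming fair, independent tosses (the function name is just for illustration):

```python
import math
from itertools import product

def shannon_entropy(probabilities):
    """Entropy in bits: H = -sum(p * log2(p)), skipping zero-probability outcomes."""
    return sum(-p * math.log2(p) for p in probabilities if p > 0)

# One fair coin toss: two equally likely outcomes
one_toss = [0.5, 0.5]
print(shannon_entropy(one_toss))  # 1.0

# Two independent fair tosses: HH, HT, TH, TT, each with probability 0.25
two_tosses = [0.5 * 0.5 for _ in product("HT", repeat=2)]
print(shannon_entropy(two_tosses))  # 2.0

# A "coin" that always lands heads carries no information at all
print(shannon_entropy([1.0, 0.0]))  # 0.0
```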

Now suppose the coin is very strange: you toss it 10 times and it lands heads every time. How would you tell someone about it? You probably wouldn't say HHHHHHHHHH; you'd say "10 tosses, all heads", and boom! You just compressed information! Easy. I just saved you hours of tedious lectures. This is of course a huge simplification, but you have converted the data into a shorter representation with the same information content; in other words, you reduced the redundancy. The information entropy of the data is unchanged; you only transformed its representation. This is called entropy coding, and it works for any kind of data.
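The "10 tosses, all heads" trick is essentially run-length encoding. Note that this is a simpler cousin of entropy coding, not what H.264 itself uses (H.264 uses context-adaptive coders, CAVLC and CABAC); the sketch below only illustrates the idea of a shorter, lossless representation.

```python
from itertools import groupby

def run_length_encode(symbols: str) -> list[tuple[str, int]]:
    """Collapse runs of identical symbols into (symbol, count) pairs."""
    return [(sym, len(list(run))) for sym, run in groupby(symbols)]

def run_length_decode(pairs: list[tuple[str, int]]) -> str:
    """Expand (symbol, count) pairs back into the original sequence."""
    return "".join(sym * count for sym, count in pairs)

tosses = "HHHHHHHHHH"
encoded = run_length_encode(tosses)
print(encoded)  # [('H', 10)]
assert run_length_decode(encoded) == tosses  # lossless round trip
```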


Frequency domain

Now that we've covered information entropy, let's move on to transforming data. You can represent data in different number systems. For example, in binary the alphabet is just 0 and 1; in hexadecimal it consists of 16 characters. There is a one-to-one mapping between these systems, so you can easily convert one into the other. With me so far? Let's keep going.
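Here is a tiny illustration of that one-to-one mapping, using Python's built-in `format` and `int`: the same value written in two alphabets, converted back and forth with nothing lost.

```python
value = 0b1011_0110_1111_0001

as_binary = format(value, "b")   # '1011011011110001'
as_hex = format(value, "x")      # 'b6f1'

# Each hex digit stands for exactly 4 binary digits, so conversion is lossless both ways
assert int(as_binary, 2) == int(as_hex, 16) == value
print(as_binary, as_hex)
```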

Now imagine that data varying in space or time can be represented in a completely different coordinate system. For example, for the brightness of an image, instead of an x-y coordinate system we use a frequency system, with the frequencies freqX and freqY on the axes. This is called a frequency domain representation. And there is a theorem stating that any data can be represented losslessly in such a system, given sufficiently high freqX and freqY.
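To make "lossless in the frequency domain" concrete, here is a sketch using a floating-point 2D DCT-II, a transform closely related to the one in video codecs. This is an assumption for illustration: H.264 actually uses a small integer approximation of the DCT (on 4x4 blocks), not this exact code. Also note that in this layout the lowest frequency sits at index [0][0] (the top-left corner), not at the center as in the article's pictures.

```python
import math

def dct_matrix(n: int) -> list[list[float]]:
    """Orthonormal DCT-II basis: row k is the k-th frequency."""
    m = []
    for k in range(n):
        scale = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
        m.append([scale * math.cos(math.pi * (2 * i + 1) * k / (2 * n)) for i in range(n)])
    return m

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(a):
    return [list(row) for row in zip(*a)]

def dct2(block):
    """Pixels (y, x) -> frequency coefficients (freqY, freqX)."""
    c = dct_matrix(len(block))
    return matmul(matmul(c, block), transpose(c))

def idct2(coeffs):
    """Inverse transform: because the basis is orthonormal, the inverse is the transpose."""
    c = dct_matrix(len(coeffs))
    return matmul(matmul(transpose(c), coeffs), c)

# A small 4x4 brightness block: a gradient with one bright pixel
block = [
    [10, 20, 30, 40],
    [12, 22, 32, 42],
    [14, 24, 90, 44],
    [16, 26, 36, 46],
]
coeffs = dct2(block)
restored = idct2(coeffs)
max_error = max(abs(restored[i][j] - block[i][j]) for i in range(4) for j in range(4))
print(f"DC coefficient: {coeffs[0][0]:.2f}, max round-trip error: {max_error:.1e}")
```

The round-trip error is only floating-point noise: changing the basis by itself throws nothing away.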

Okay, but what are freqX and freqY?

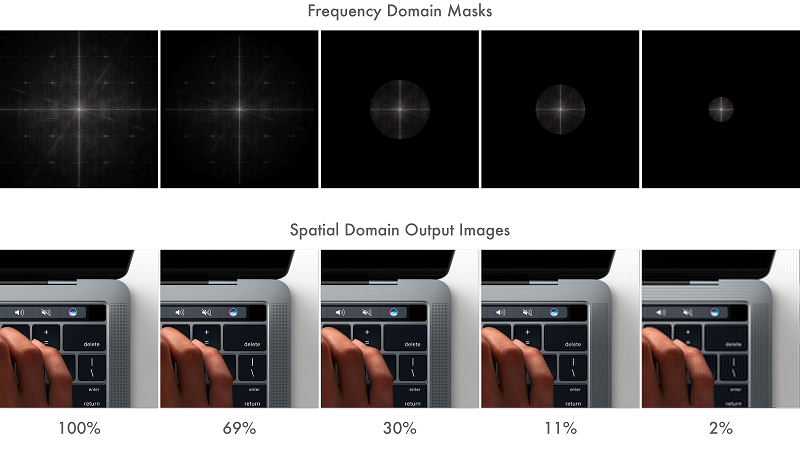

freqX and freqY are just another basis for the coordinate system. Just as you can convert from binary to hexadecimal, you can convert from x-y to freqX and freqY. Below is the transition from one system to the other.

The fine grille of the MacBook Pro contains high-frequency information and lands in the high-frequency region. So fine details have high frequencies, while gradual changes, such as smooth shifts in color and brightness, have low frequencies. Everything in between falls in between.

In this representation, low-frequency details are near the center of the image and high-frequency details are in the corners.

So far, so good. But why do this?

Because now you can take the image in its frequency domain representation and cut off the corners (in other words, apply a mask), which lowers the detail. If you then convert the image back to the familiar representation, you will see that it still looks similar to the original, just with less detail. This manipulation saves space. By choosing the mask, you control how much detail is kept.
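A sketch of the masking idea, under the same assumptions as a plain floating-point DCT (not H.264's actual integer transform). Because this DCT layout puts low frequencies at the top-left corner rather than the center, the "circular mask" becomes a quarter circle around index [0][0]. The test image is hypothetical: a smooth gradient plus a fine checkerboard standing in for the speaker grille.

```python
import math

N = 8

def dct_matrix(n):
    """Orthonormal DCT-II basis: row k is the k-th frequency."""
    return [[(math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n))
             * math.cos(math.pi * (2 * i + 1) * k / (2 * n)) for i in range(n)]
            for k in range(n)]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(a):
    return [list(row) for row in zip(*a)]

def dct2(block):
    c = dct_matrix(len(block))
    return matmul(matmul(c, block), transpose(c))

def idct2(coeffs):
    c = dct_matrix(len(coeffs))
    return matmul(matmul(transpose(c), coeffs), c)

def circular_mask(coeffs, radius):
    """Zero every coefficient farther than `radius` from the low-frequency corner (0, 0)."""
    n = len(coeffs)
    out = [[0.0] * n for _ in range(n)]
    kept = 0
    for v in range(n):          # vertical frequency
        for u in range(n):      # horizontal frequency
            if u * u + v * v <= radius * radius:
                out[v][u] = coeffs[v][u]
                kept += 1
    return out, kept

def max_diff(a, b):
    return max(abs(a[y][x] - b[y][x]) for y in range(N) for x in range(N))

# Smooth gradient (low frequency) plus a fine checkerboard "grille" (high frequency)
smooth = [[8 * y + 8 * x for x in range(N)] for y in range(N)]
grille = [[10 if (x + y) % 2 == 0 else -10 for x in range(N)] for y in range(N)]
block = [[smooth[y][x] + grille[y][x] for x in range(N)] for y in range(N)]

masked, kept = circular_mask(dct2(block), radius=3)
restored = idct2(masked)

print(f"kept {kept} of {N * N} coefficients")
print(f"max distance from the smooth image: before {max_diff(block, smooth):.1f}, "
      f"after {max_diff(restored, smooth):.1f}")
```

Keeping only 11 of 64 coefficients removes almost all of the checkerboard while the gradient survives, which is the "less detail, same overall picture" effect described above.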

Below is the familiar laptop again, now with circular masks applied.

The percentages show the information entropy relative to the original image. Without zooming in, the difference is hard to see even at 2%! The car now weighs 20 kg!

That's how you shed weight. This lossy compression step is called quantization.
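The mask picture is a simplification. In practice, quantization divides each frequency coefficient by a step size and rounds the result, so small (typically high-frequency) coefficients collapse to zero. H.264 derives the step from a quantization parameter (QP) and uses integer arithmetic; the sketch below uses a single hypothetical step and made-up coefficients to show the effect.

```python
def quantize(coeffs, step):
    """Divide by the step size and round to the nearest integer: this is the lossy part."""
    return [round(c / step) for c in coeffs]

def dequantize(levels, step):
    """The decoder can only multiply back; the rounding error is gone for good."""
    return [level * step for level in levels]

# Hypothetical transform coefficients, ordered from low to high frequency
coeffs = [312.0, -41.5, 18.2, -7.9, 3.1, -1.6, 0.8, -0.3]
step = 10  # hypothetical step size; a larger step discards more detail

levels = quantize(coeffs, step)
print(levels)                    # [31, -4, 2, -1, 0, 0, 0, 0]
print(dequantize(levels, step))  # [310, -40, 20, -10, 0, 0, 0, 0]
```

The long run of zeros at the high-frequency end is exactly what the later entropy-coding stage compresses so well.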

That's impressive. What other techniques are there?


Color processing

The human eye is not very good at distinguishing between similar shades of color. It can detect very small differences in brightness, but not in color. So there must be a way to get rid of unneeded color information and save even more space.

In television, RGB colors are converted to YCbCr, where Y is the luminance component (essentially a black-and-white brightness image) and Cb and Cr are the color components. RGB and YCbCr are equivalent in terms of information entropy.
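A sketch of the conversion and its inverse, showing that no information is lost. The coefficients are an assumption: they are the full-range BT.601 variant used by JPEG/JFIF. H.264 streams usually signal BT.601 or BT.709 with a limited ("studio") range, so the exact constants in a real decoder may differ.

```python
def rgb_to_ycbcr(r, g, b):
    """Full-range BT.601 (JPEG/JFIF style) RGB -> YCbCr, values in 0..255."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr

def ycbcr_to_rgb(y, cb, cr):
    """Inverse of rgb_to_ycbcr (up to tiny floating-point error)."""
    r = y + 1.402 * (cr - 128)
    g = y - 0.344136 * (cb - 128) - 0.714136 * (cr - 128)
    b = y + 1.772 * (cb - 128)
    return r, g, b

for color in [(255, 0, 0), (0, 255, 0), (12, 200, 77), (255, 255, 255)]:
    back = ycbcr_to_rgb(*rgb_to_ycbcr(*color))
    # Round trip recovers the original color: the two representations are equivalent
    assert all(abs(a - b) < 0.01 for a, b in zip(color, back)), (color, back)
    print(color, "->", tuple(round(c, 1) for c in rgb_to_ycbcr(*color)))
```

For any gray (R = G = B), Cb and Cr stay at 128: all the information lives in Y, which is why a black-and-white set can simply ignore the color components.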

Why complicate things? Isn't RGB good enough?

Black-and-white TVs had only the Y component. When color TV arrived, engineers had to transmit a color RGB image in a way that black-and-white sets could still use. So instead of sending two separate signals, they decided to encode color as the Cb and Cr components and transmit them together with Y; color TVs then convert the luminance and color components back into ordinary RGB themselves.

But here's the trick: the luminance component is encoded at full resolution, while the color components are encoded at only a quarter of the resolution. We can get away with this because the eye/brain can barely tell shades apart. This halves the image size with minimal visible difference. Half! The car now weighs 10 kg!
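Where does "half" come from? With one luma sample per pixel and two chroma planes at a quarter resolution each (the 4:2:0 layout), a frame needs 1 + ¼ + ¼ = 1.5 samples per pixel instead of 3. A sketch, assuming even frame dimensions and simple 2x2 averaging for the chroma planes (real encoders may use other downsampling filters):

```python
def subsample_420(plane):
    """Average each 2x2 block of a chroma plane (one common, but not the only, filter)."""
    h, w = len(plane), len(plane[0])  # assumes even height and width
    return [
        [(plane[y][x] + plane[y][x + 1] + plane[y + 1][x] + plane[y + 1][x + 1]) / 4
         for x in range(0, w, 2)]
        for y in range(0, h, 2)
    ]

def samples_per_frame(width, height, chroma_420):
    """Luma samples plus both chroma planes (Cb and Cr)."""
    luma = width * height
    chroma = 2 * (width // 2) * (height // 2) if chroma_420 else 2 * width * height
    return luma + chroma

full = samples_per_frame(1920, 1080, chroma_420=False)
sub = samples_per_frame(1920, 1080, chroma_420=True)
print(full, sub, sub / full)  # 6220800 3110400 0.5

cb = [[100, 102, 110, 112],
      [101, 103, 111, 113]]
print(subsample_420(cb))  # [[101.5, 111.5]]
```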

This technique of encoding an image with reduced color resolution is called chroma subsampling. It has been in wide use for a long time and is not specific to H.264.

These are the most significant size-reduction techniques in lossy compression. We have gotten rid of most of the detail and cut the color information in half.

Can we go further?

Yes. Trimming the image is only the first step. So far we have only looked at individual frames. It's time to look at compression over time, where we work with a group of frames.


Motion compensation

H.264 is a standard that supports motion compensation.

Motion compensation? What's that?

Imagine you are watching a tennis match. The camera is fixed, shooting from one angle, and the only thing that moves is the ball. How would you encode it? The usual way, right? A three-dimensional array of pixels, with two spatial coordinates and one time coordinate, right?

But why? Most of the image stays the same. The court, the net and the audience don't change; the only thing moving is the ball. What if we stored just one background image plus one image of a ball moving across it? Wouldn't that save a lot of space? You see where I'm going, right? Motion compensation!

And that's exactly what H.264 does. H.264 splits the image into macroblocks, typically 16×16 pixels, which are used to estimate motion. One frame is stored in full; it is usually called an I-frame (intra frame) and contains everything. Subsequent frames can be either P-frames (predicted) or B-frames (bi-directionally predicted). In a P-frame, a motion vector is encoded for each macroblock based on previous frames, so the decoder has to use those previous frames: it starts from the most recent I-frame and progressively applies the changes of each subsequent frame until it reaches the current one.
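How does an encoder find a motion vector? A common textbook approach is block matching: compare the current block with displaced blocks in the reference frame and keep the displacement with the lowest sum of absolute differences (SAD). This is a sketch under simplifying assumptions: an exhaustive search, whole-pixel vectors, and a toy 8x8 frame with 4x4 blocks instead of 16×16 macroblocks. Real H.264 encoders use faster search strategies and quarter-pixel precision, and the function names here are hypothetical.

```python
def sad(cur, ref, bx, by, dx, dy, size):
    """Sum of absolute differences between a block in cur and a displaced block in ref."""
    return sum(
        abs(cur[by + y][bx + x] - ref[by + dy + y][bx + dx + x])
        for y in range(size) for x in range(size)
    )

def find_motion_vector(cur, ref, bx, by, size, search):
    """Exhaustive search in a +/-search window; returns (dx, dy, cost) with the lowest SAD."""
    h, w = len(ref), len(ref[0])
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            # skip candidate blocks that would fall outside the reference frame
            if not (0 <= by + dy and by + dy + size <= h and 0 <= bx + dx and bx + dx + size <= w):
                continue
            cost = sad(cur, ref, bx, by, dx, dy, size)
            if best is None or cost < best[0]:
                best = (cost, dx, dy)
    return best[1], best[2], best[0]

def frame_with_ball(x, y, w=8, h=8):
    """Black background with a 2x2 white 'ball' whose top-left corner is at (x, y)."""
    frame = [[0] * w for _ in range(h)]
    for yy in range(y, y + 2):
        for xx in range(x, x + 2):
            frame[yy][xx] = 255
    return frame

ref = frame_with_ball(2, 2)  # previous frame
cur = frame_with_ball(4, 3)  # ball moved 2 pixels right and 1 pixel down

# The vector points from the current block to where its content was in the reference
print(find_motion_vector(cur, ref, bx=4, by=2, size=4, search=2))  # (-2, -1, 0)
```

Instead of 16 pixel values, the encoder can now send one vector (and, in general, a small residual for whatever the prediction gets wrong).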

B-frames are even more interesting: prediction works in both directions, using the frames both before and after them. Now you can see why the video at the beginning of the article weighs so little: it is just 3 I-frames, with the macroblocks moving around between them.
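Why do two directions help? A B-frame block can be predicted from both a previous and a following reference and the two predictions averaged, which handles things like fades well. This sketch assumes the two motion-compensated blocks have already been fetched, and uses a rounded average, (a + b + 1) >> 1, which to my understanding matches H.264's default bi-prediction when weighted prediction is off; treat that detail as an assumption. The block values are made up.

```python
def bi_predict(block_prev, block_next):
    """Average two motion-compensated predictions with rounding: (a + b + 1) >> 1."""
    return [[(a + b + 1) >> 1 for a, b in zip(row_p, row_n)]
            for row_p, row_n in zip(block_prev, block_next)]

def residual(actual, prediction):
    """What still has to be encoded after prediction."""
    return [[a - p for a, p in zip(ra, rp)] for ra, rp in zip(actual, prediction)]

# A block that fades from 100 to 120 between the two reference frames
prev_block = [[100, 100], [100, 100]]
next_block = [[120, 120], [120, 120]]
actual = [[110, 111], [109, 110]]

pred = bi_predict(prev_block, next_block)
print(pred)                    # [[110, 110], [110, 110]]
print(residual(actual, pred))  # [[0, 1], [-1, 0]]
```

Predicting from either reference alone would leave residuals around ±10; the average leaves almost nothing to encode.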

With this technique, only the motion vector differences are encoded, which gives a high compression ratio for any video with motion.
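"Differences" here means that each motion vector is predicted from already-coded neighbours and only the difference from that prediction is stored. In the common case H.264 uses the component-wise median of the left, top and top-right neighbours' vectors. The sketch below covers only that case and ignores unavailable neighbours and special partition shapes; the vectors are made up.

```python
def median3(a, b, c):
    return sorted([a, b, c])[1]

def predict_mv(left, top, top_right):
    """Component-wise median of three neighbouring motion vectors."""
    return (median3(left[0], top[0], top_right[0]),
            median3(left[1], top[1], top_right[1]))

def mv_difference(mv, left, top, top_right):
    """The value that actually gets entropy-coded."""
    px, py = predict_mv(left, top, top_right)
    return (mv[0] - px, mv[1] - py)

# Neighbouring macroblocks all move roughly the same way (e.g. a camera pan)
left, top, top_right = (4, -1), (5, -1), (4, 0)
current = (4, -1)
print(predict_mv(left, top, top_right))              # (4, -1)
print(mv_difference(current, left, top, top_right))  # (0, 0)
```

When neighbouring blocks move together, most differences are zero or close to it, which sets up the next stage nicely.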

We have covered spatial and temporal compression. Quantization cut the data size many times over, chroma subsampling halved what remained, and motion compensation let us store only 3 full frames out of the 300 in the video we examined.

Looks impressive. Now what?

Now we finish up with traditional lossless entropy coding. Why not?


Entropy encoding

After the lossy stages, the I-frames still contain redundant data. The motion vectors of the macroblocks in P-frames and B-frames also carry a lot of the same information, since macroblocks often move identically, as in the initial video.

This redundancy can be removed with entropy coding. And there's no need to worry about the data itself: this is a standard lossless compression technique, so everything can be restored exactly.
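As an illustration of entropy coding, here is Huffman coding applied to a made-up list of motion vector differences. This is a swapped-in technique: H.264 actually uses CAVLC or CABAC, which adapt to context, but Huffman coding shows the core idea that frequent symbols get shorter codes.

```python
import heapq
from collections import Counter

def huffman_code(symbols):
    """Build a prefix code: frequent symbols get short bit strings."""
    counts = Counter(symbols)
    if len(counts) == 1:  # degenerate case: a single distinct symbol still needs 1 bit
        return {next(iter(counts)): "0"}
    # heap entries: (frequency, tie_breaker, {symbol: code_so_far})
    heap = [(freq, i, {sym: ""}) for i, (sym, freq) in enumerate(counts.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        # merge the two least frequent subtrees, prefixing their codes with 0 and 1
        f1, _, codes1 = heapq.heappop(heap)
        f2, _, codes2 = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in codes1.items()}
        merged.update({s: "1" + c for s, c in codes2.items()})
        heapq.heappush(heap, (f1 + f2, next_id, merged))
        next_id += 1
    return heap[0][2]

# Hypothetical motion vector differences: mostly (0, 0), as with identically moving blocks
mvds = [(0, 0)] * 12 + [(1, 0)] * 2 + [(0, -1)] + [(-1, 0)]
codes = huffman_code(mvds)
encoded = "".join(codes[s] for s in mvds)
print(codes)
print(f"{len(encoded)} bits instead of {2 * len(mvds)} with a fixed 2-bit code")
```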

That's it! These are the techniques H.264 is built on, and they are the standard techniques of video compression.

Great! But I'm curious how much our car weighs now.

The original video was captured at a non-standard resolution of 1232x1154. If you do the math, you get:

5 sec. @ 60 fps = 1232x1154x60x3x5 => ~1.28 GB

Compressed video => 175 KB

Scaling this result to our car's original weight of one ton, we get 0.14 kg. That's 140 grams!
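The final calculation, spelled out. This assumes decimal units (1 KB = 1000 bytes, 1 GB = 10^9 bytes) and 3 bytes per pixel, as in the formula above.

```python
raw_bytes = 1232 * 1154 * 60 * 3 * 5   # 5 s at 60 fps, 3 bytes per pixel
compressed_bytes = 175 * 1000          # 175 KB, assuming decimal kilobytes

ratio = compressed_bytes / raw_bytes   # fraction of the original size that remains
car_kg = 1000 * ratio                  # scale to the one-ton car
print(f"raw: {raw_bytes / 1e9:.2f} GB, compression ratio 1:{1 / ratio:.0f}, "
      f"car: {car_kg * 1000:.0f} g")
```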

Yes, it's magic!

Of course, I have described the results of decades of research in this field in a very simplified form. If you want to learn more, the Wikipedia page is quite informative.
